UL

UL, formerly Futuremark, produces the industry's most trusted and widely used performance tests for PCs, tablets, smartphones, and VR systems.

Business Goal

UL’s mission is to provide insight into a device and how its performance is affected by use and environment.

Challenge

Create a product that offers a complete report with comparable results regarding a laptop’s battery life.

"The important point of this comparison is that battery life varies dramatically depending on how the system is used."

Research

When evaluating the performance of laptops, our users noted battery life as one of the most important attributes. Before designing the battery life tests for PCMark 10, the benchmark that measures the performance of PCs with modern office workloads, we investigated how our users measured battery life. Our research showed that most users ran their own battery tests based on specific scenarios, and some ran the battery test in our previous benchmark, PCMark 8. These approaches produced different battery life results depending on the workload and settings, which made it difficult for consumers to decide which result to trust.

Because test scenarios differed, battery life results across laptops were not comparable.

Competitive Analysis

Understanding the impact of different scenarios and the needs of our users, we compared our test with our competitor’s to figure out how we might improve our product. The main focus was to identify an individual’s main use cases and how we could create accurate tests to measure battery life in those cases.

Distinguishing which scenarios were offered allowed us to improve our test to paint a complete picture of a device’s battery life.

Design

Designing for battery life meant understanding that there was no single test a user could run to gain a complete understanding of their laptop’s battery life. The battery life of a device, from full charge to power down, depended on how the laptop was used. Understanding this, the team created four battery benchmarks: Modern Office, Idle, Gaming, and Video.

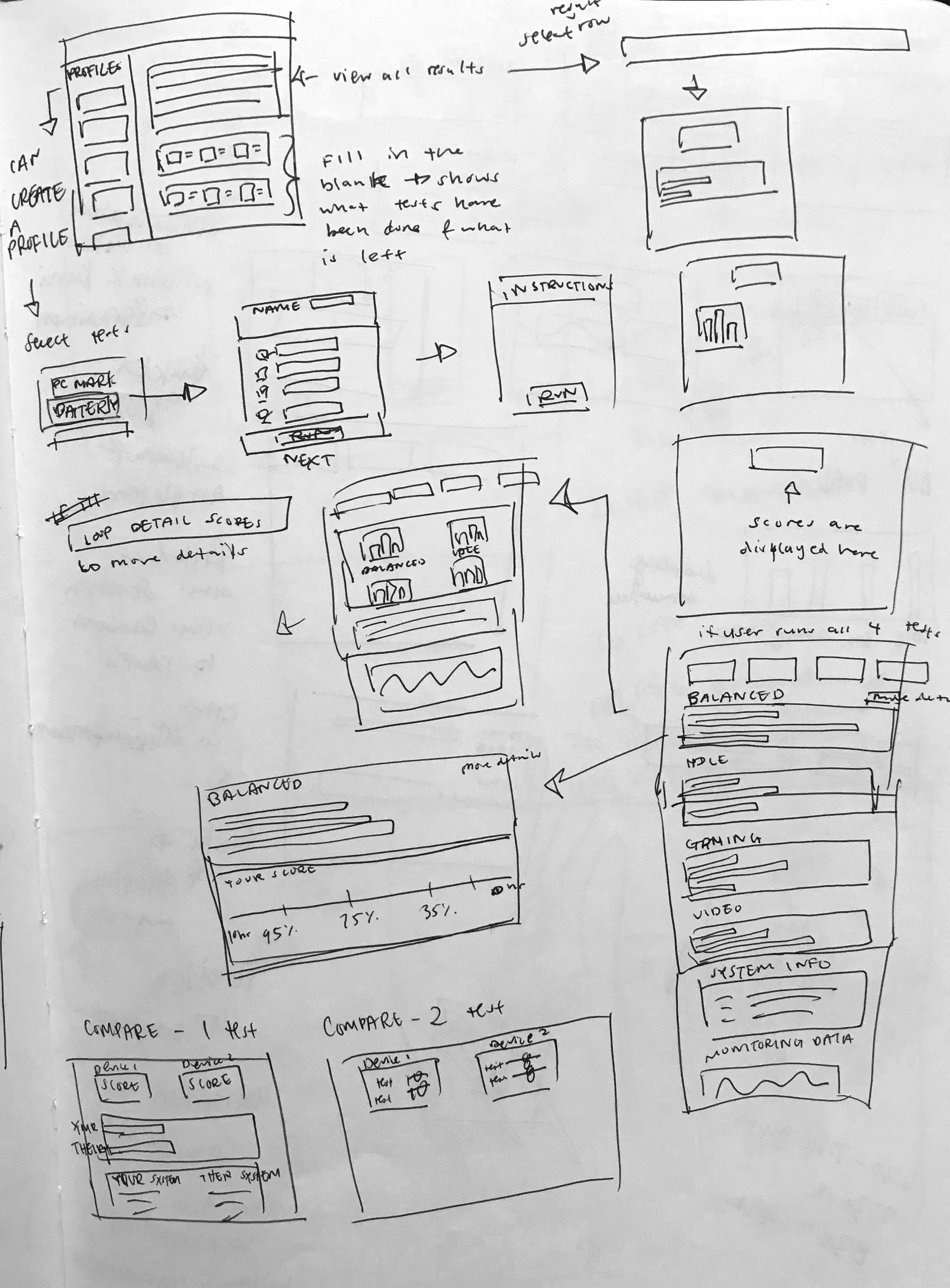

The main task during ideation was to design a flow that would make a user understand the importance of running all four tests to produce a battery profile, the complete story of their battery life based on activity.

Once a wireframe flow was created that aligned with the goal, I digitized the wireframes for a quick user test to understand how users interpreted each screen.

Users did not understand the concept of a profile and how it tied together with the tests below.

The details on the left provided more insight on the tests, but the right section was confusing.

All users understood the functionality of this screen.

The participants did not understand what a profile was or what the percentages for a quick run meant (on the benchmark description screen). To reduce the complexity of the content, I created two variations of the benchmark selection screen and conducted an A/B test.

Feedback: Clearly states what each option offers, less overwhelming with choices. A lot of text to read.

Feedback: Clear actions, less reading, visually appealing. Run button is not prominent.

Analyzing the feedback from the A/B test, we reflected on the main objective of the project. Because the main focus was to motivate users to run all four tests, we decided to remove the quick run option: it attracted a majority of users and produced less accurate results than the full profile run. We also settled on moving forward with the second screen design, which 60% of participants preferred over the first screen because it allowed users to understand more about each test.

Solution

Key findings

Although my first instinct was to be as descriptive as possible when adding the new tests to PCMark 10, I learned that text should serve primarily as a guide rather than as detailed information, which reduces clutter. I also found it extremely important to review the main objectives of the project frequently, as stakeholder needs often change.